This article describes how to register and use voice commands to start your Universal Windows Phone app, and how to continue the conversation within your app. The attached sample app comes with the following features:

- It registers a set of voice commands with Cortana,

- it recognizes a simple ‘open the door’ command,

- it discovers whether it was started by voice or by text,

- it recognizes a natural ‘take over’ command, with lots of optional terms,

- it recognizes a complex ‘close a colored something’ command, where ‘something’ and ‘color’ come from predefined lists,

- it modifies one of these lists programmatically,

- it requests the missing color when you ask it to ‘close something’, and

- it comes with an improved version of the Cortana-like SpeechInputBox control.

Here are some screenshots of this app. It introduces my new personal assistant, named Kwebbel (or in English ‘Kwebble’):

Kwebble is written as a Universal app. I dropped the Windows app project because Cortana is not yet available on that platform. If you prefer to stick to Silverlight, check the MSDN Voice Search sample.

Registering the Voice Command Definitions

Your app can be activated by voice only after it has registered its call sign and its list of commands with Cortana. This is done through an XML file with Voice Command Definitions (VCD). It contains command sets, one for each language that you want to support. Here is how such a command set starts, with the command prefix (“Kwebble”) and the sample text that will appear in the Cortana overview:

    <!-- Be sure to use the v1.1 namespace to utilize the PhraseTopic feature -->
    <VoiceCommands xmlns="http://schemas.microsoft.com/voicecommands/1.1">
      <!-- The CommandSet Name is used to programmatically access the CommandSet -->
      <CommandSet xml:lang="en-us" Name="englishCommands">
        <!-- The CommandPrefix provides an alternative to your full app name for invocation -->
        <CommandPrefix> Kwebble </CommandPrefix>
        <!-- The CommandSet Example appears in the global help alongside your app name -->
        <Example> Close the door. </Example>

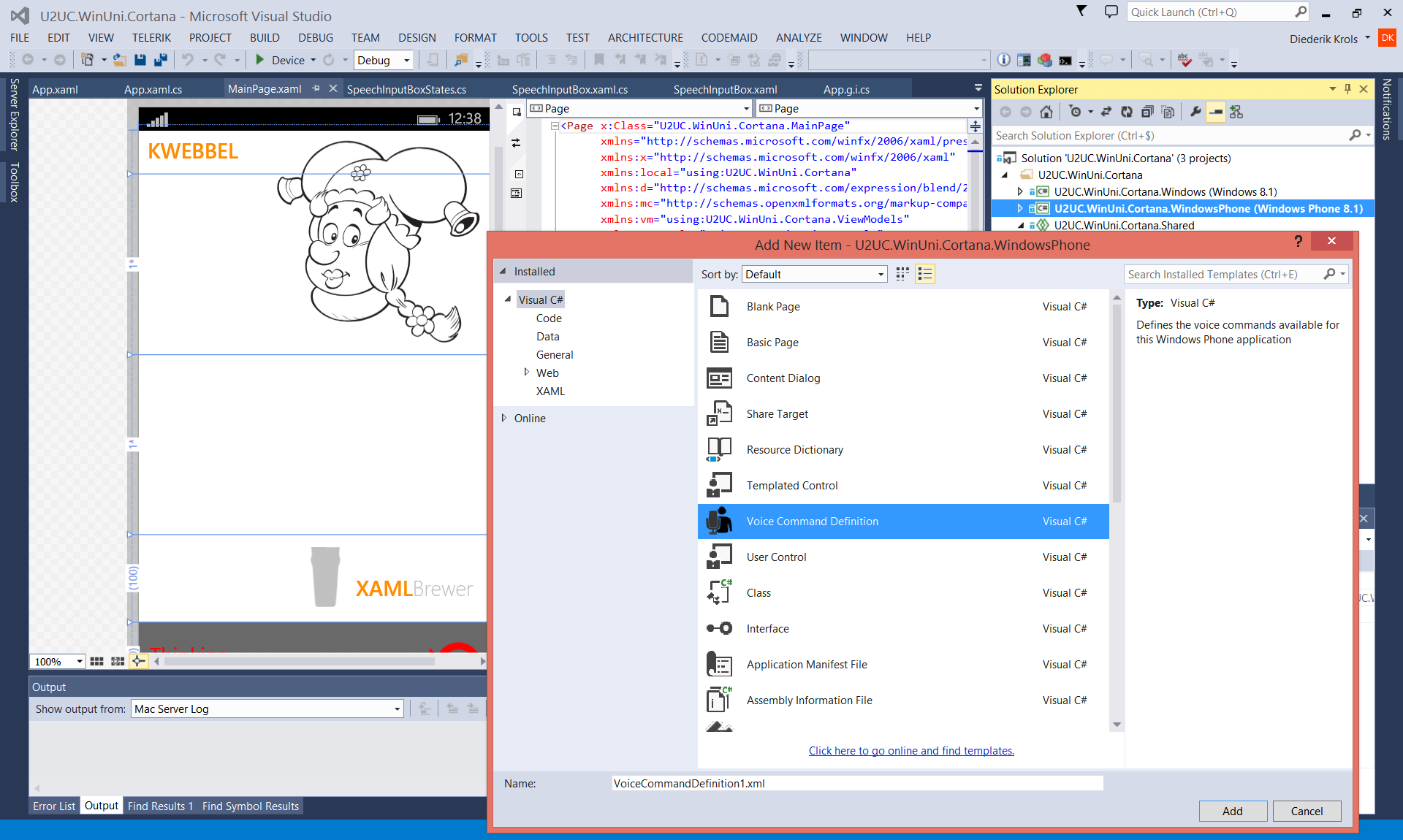

Later in this article, we cover the rest of the elements. You don’t have to write this file from scratch; there’s a Visual Studio menu to add a VCD file to your project:

Here’s how to register the file through the VoiceCommandManager. I factored out all Cortana-related code into a static SpeechActivation class:

    /// <summary>
    /// Register the VCD with Cortana.
    /// </summary>
    public static async void RegisterCommands()
    {
        var storageFile = await StorageFile.GetFileFromApplicationUriAsync(new Uri("ms-appx:///Assets/VoiceCommandDefinition.xml"));
        await VoiceCommandManager.InstallCommandSetsFromStorageFileAsync(storageFile);
    }
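Because RegisterCommands is declared async void, the caller cannot observe an exception raised during installation (for instance when the VCD file fails validation). A possible variant is sketched below. It returns a Task and reports failure instead. The class name SpeechActivationSketch, the method name RegisterCommandsAsync and the boolean return value are illustrative assumptions and not part of the sample app:

```csharp
using System;
using System.Diagnostics;
using System.Threading.Tasks;
using Windows.Media.SpeechRecognition;
using Windows.Storage;

public static class SpeechActivationSketch
{
    /// <summary>
    /// Registers the VCD with Cortana and reports whether it succeeded.
    /// Hypothetical variant of RegisterCommands; not part of the sample app.
    /// </summary>
    public static async Task<bool> RegisterCommandsAsync()
    {
        try
        {
            var uri = new Uri("ms-appx:///Assets/VoiceCommandDefinition.xml");
            var storageFile = await StorageFile.GetFileFromApplicationUriAsync(uri);
            await VoiceCommandManager.InstallCommandSetsFromStorageFileAsync(storageFile);
            return true;
        }
        catch (Exception ex)
        {
            // A missing or invalid VCD file ends up here instead of in an unobserved exception.
            Debug.WriteLine("Voice command registration failed: " + ex.Message);
            return false;
        }
    }
}
```

A caller could await this method during startup and decide for itself whether a failed registration is worth reporting to the user.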

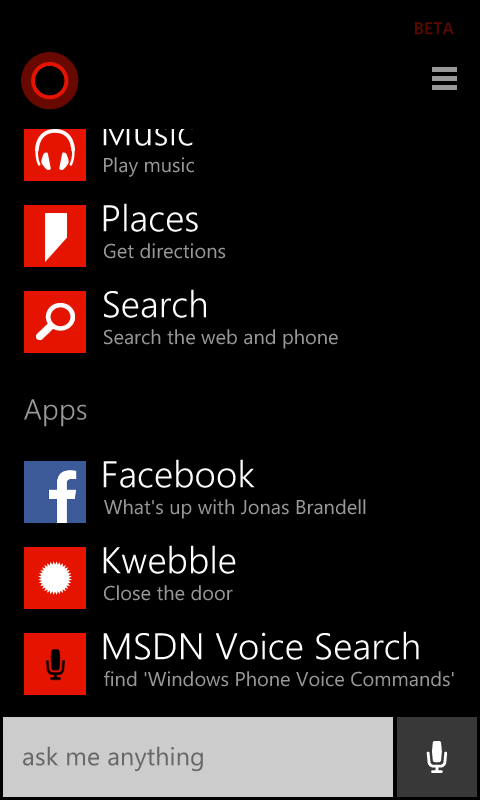

If your commands are registered and you ask Cortana “What can I say”, then the app is listed and the sample command is shown:

Universal apps cannot yet define the icon to be displayed in that list.

A simple command

Let’s take a look at the individual commands. Each command comes with

- Name: a technical name that you can use in your code,

- Example: an example that is displayed in the Cortana UI,

- ListenFor: one or more phrases to listen for,

- Feedback: the text that Cortana displays and speaks when she recognizes the command, and

- Navigate: the page to navigate to when the app is activated through the command.

The Navigate element is required by the XSD, but it is used by Silverlight only: it is ignored by Universal apps.

Here’s an example of a very basic ‘open the door’ command. It’s just an exhaustive enumeration of the alternatives:

    <Command Name="DoorOpen">
      <!-- The Command example appears in the drill-down help page for your app -->
      <Example> Door 'Open' </Example>

      <!-- ListenFor elements provide ways to say the command. -->
      <ListenFor> Door open </ListenFor>
      <ListenFor> Open door </ListenFor>
      <ListenFor> Open the door </ListenFor>

      <!-- Feedback provides the displayed and spoken text when your command is triggered -->
      <Feedback> Opening the door ... </Feedback>

      <!-- Navigate specifies the desired page or invocation destination for the Command -->
      <!-- Silverlight only, WinRT and Universal apps deal with this themselves. -->
      <!-- But it's mandatory according to the XSD. -->
      <Navigate Target="OtherPage.xaml" />
    </Command>

Here you see the list of commands in the Cortana UI, and the feedback:

Since ‘Kwebble’ is not really an English word, Cortana has trouble recognizing it. I’ve seen the term being resolved into ‘Grebel’ (as in the screenshot), ‘Pueblo’, ‘Devil’ and very often ‘Google’. Still, something that sounds like ‘Kwebble’ followed by ‘open the door’ starts the app appropriately. Strangely enough, that’s not what happens with text input. If I type ‘Kwebbel close the door’ (another valid command), the app’s identifier is not recognized and I’m redirected to Bing:

How the app reacts

A Universal app can determine if it is activated by Cortana in the OnActivated event of its root App class. If the provided event argument is of the type VoiceCommandActivatedEventArgs, then Cortana is responsible for the launch:

    protected override void OnActivated(IActivatedEventArgs args)
    {
        var rootFrame = EnsureRootFrame();

        // ...

        base.OnActivated(args);

    #if WINDOWS_PHONE_APP
        Services.SpeechActivation.HandleCommands(args, rootFrame);
    #endif

        // Ensure the current window is active
        Window.Current.Activate();
    }

    /// <summary>
    /// Verify whether the app was activated by voice command, and deal with it.
    /// </summary>
    public static void HandleCommands(IActivatedEventArgs args, Frame rootFrame)
    {
        if (args.Kind == ActivationKind.VoiceCommand)
        {
            VoiceCommandActivatedEventArgs voiceArgs = (VoiceCommandActivatedEventArgs)args;

            // ...
        }
    }

The Result property of this VoiceCommandActivatedEventArgs is a SpeechRecognitionResult instance that contains detailed information about the command. In Silverlight there’s a RuleName property that references the command; in Universal apps it does not exist. I was first tempted to parse the full Text to figure out what command was spoken or typed, but that would become rather complex for the more natural commands. It’s easier and safer to walk through the RulePath elements, the list of rule identifiers that triggered the command. Here’s another code snippet from the sample app: the ‘Take over’ command guides us to the main page, the other commands bring us to the OtherPage. We conveniently pass the event arguments to the page we’re navigating to, so it can also access the voice command:

    // First attempt:
    // if (voiceArgs.Result.Text.Contains("take over"))

    // Better:
    if (voiceArgs.Result.RulePath.ToList().Contains("TakeOver"))
    {
        rootFrame.Navigate(typeof(MainPage), voiceArgs);
    }
    else
    {
        rootFrame.Navigate(typeof(OtherPage), voiceArgs);
    }
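When the number of commands grows, the if/else chain above can be replaced by a lookup table. The following is a minimal sketch, assuming a hypothetical CommandRouter class. The command and page names match the ones used in this article, but the class and its ResolvePage method are illustrative. It works on plain strings so the routing logic can be exercised without Cortana:

```csharp
using System;
using System.Collections.Generic;

public static class CommandRouter
{
    // Maps VCD command names to page names. Hypothetical table for illustration.
    private static readonly Dictionary<string, string> Pages =
        new Dictionary<string, string>
        {
            { "TakeOver", "MainPage" }
        };

    /// <summary>
    /// Returns the page for the first known rule in the path,
    /// or the fallback page when none of the rules is known.
    /// </summary>
    public static string ResolvePage(IEnumerable<string> rulePath, string fallback = "OtherPage")
    {
        foreach (var rule in rulePath)
        {
            string page;
            if (Pages.TryGetValue(rule, out page))
            {
                return page;
            }
        }

        return fallback;
    }
}
```

In the app itself the table would more likely map command names to page types, so that the result can be handed straight to rootFrame.Navigate.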

Optional words in command phrases

The ListenFor elements in the command phrases may contain optional words. These are wrapped in square brackets. Here’s the TakeOver command from the sample app. It recognizes different natural forms of the ‘take over’ command, like ‘would you please take over’ and ‘take over my session please’:

    <Command Name="TakeOver">
      <!-- The Command example appears in the drill-down help page for your app -->
      <Example> Take over </Example>

      <!-- ListenFor elements provide ways to say the command, including [optional] words -->
      <ListenFor> [would] [you] [please] take over [the] [my] [session] [please]</ListenFor>

      <!-- Feedback provides the displayed and spoken text when your command is triggered -->
      <Feedback> Thanks, taking over the session ... </Feedback>

      <!-- Navigate specifies the desired page or invocation destination for the Command -->
      <!-- Silverlight only, WinRT and Universal apps deal with this themselves. -->
      <!-- But it's mandatory according to the XSD. -->
      <Navigate Target="MainPage.xaml" />
    </Command>

When the command is fired, Kwebble literally takes over and starts talking. Since the VoiceCommandActivatedEventArgs instance was passed from the app to the page, the page can further analyze it to adapt its behavior:

    /// <summary>
    /// Invoked when this page is about to be displayed in a Frame.
    /// </summary>
    protected async override void OnNavigatedTo(NavigationEventArgs e)
    {
        if (e.Parameter is VoiceCommandActivatedEventArgs)
        {
            var args = e.Parameter as VoiceCommandActivatedEventArgs;
            var speechRecognitionResult = args.Result;

            // Get the whole command phrase.
            this.InfoText.Text = "'" + speechRecognitionResult.Text + "'";

            // Find the command.
            foreach (var item in speechRecognitionResult.RulePath)
            {
                this.InfoText.Text += ("\n\nRule: " + item);
            }

            // ...

            if (speechRecognitionResult.RulePath.ToList().Contains("TakeOver"))
            {
                await this.Dispatcher.RunAsync(
                    Windows.UI.Core.CoreDispatcherPriority.Normal,
                    () => Session_Taken_Over(speechRecognitionResult.CommandMode()));
            }
        }
    }

Detecting the command mode

If the app was started through a spoken Cortana command, it may start talking. If the command was provided in quiet mode (typed in the input box through the keyboard), then the app should also react quietly. You can figure out the command mode (Voice or Text) by looking it up in the Properties of the SemanticInterpretation of the speech recognition result. Here’s an extension method that returns the command mode for a speech recognition result:

    /// <summary>
    /// Returns how the app was voice activated: Voice or Text.
    /// </summary>
    public static CommandModes CommandMode(this SpeechRecognitionResult speechRecognitionResult)
    {
        var semanticProperties = speechRecognitionResult.SemanticInterpretation.Properties;

        if (semanticProperties.ContainsKey("commandMode"))
        {
            return (semanticProperties["commandMode"][0] == "voice" ? CommandModes.Voice : CommandModes.Text);
        }

        return CommandModes.Text;
    }

    /// <summary>
    /// Voice Command Activation Modes: speech or text input.
    /// </summary>
    public enum CommandModes
    {
        Voice,
        Text
    }

Here’s how the sample app reacts to the ‘take over’ command. In Voice mode it loads an SSML document and starts talking; in Text mode it just updates the screen:

    if (mode == CommandModes.Voice)
    {
        // Get the prepared text.
        var folder = Windows.ApplicationModel.Package.Current.InstalledLocation;
        folder = await folder.GetFolderAsync("Assets");
        var file = await folder.GetFileAsync("SSML_Session.xml");
        var ssml = await Windows.Storage.FileIO.ReadTextAsync(file);

        // Say it.
        var voice = new Voice(this.MediaElement);
        voice.SaySSML(ssml);
    }
    else
    {
        // Only update the UI.
        this.InfoText.Text += "\n\nBla bla bla ....";
    }
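The Voice class is a helper from the sample app and is not listed in this article. As a rough idea of what such a helper might do, here is a sketch that uses the SpeechSynthesizer from Windows.Media.SpeechSynthesis. The SsmlSpeaker class and its SaySsmlAsync method are assumptions made for illustration, not the sample’s actual code:

```csharp
using System;
using System.Threading.Tasks;
using Windows.Media.SpeechSynthesis;
using Windows.UI.Xaml.Controls;

public static class SsmlSpeaker
{
    /// <summary>
    /// Synthesizes an SSML document and plays it through the given MediaElement.
    /// Hypothetical helper; the sample's own Voice class may work differently.
    /// </summary>
    public static async Task SaySsmlAsync(MediaElement mediaElement, string ssml)
    {
        var synthesizer = new SpeechSynthesizer();

        // Turn the SSML markup into an audio stream.
        SpeechSynthesisStream stream = await synthesizer.SynthesizeSsmlToStreamAsync(ssml);

        // Hand the audio to the MediaElement and start playback.
        mediaElement.SetSource(stream, stream.ContentType);
        mediaElement.Play();
    }
}
```

MediaElement raises its MediaEnded event when playback finishes, which is one way a helper like this could signal that speaking has completed.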

Here’s a screenshot of the app in both modes (just images, no sound effects):

Natural command phrases

Do not assume that the user will try to launch your app by just saying the call sign and a command name: “computer, start simulation” is so eighties. Modern speech recognition APIs deal very well with natural language, and Cortana is no exception. The Voice Command Definition file can hold more than just fixed words and optional words (in square brackets): it also supports so-called phrase lists and phrase topics, which are referenced between curly brackets. The Kwebble app uses a couple of phrase lists. For an example of a phrase topic, check the MSDN Voice Commands Quick start.

The following command recognizes the ‘close’ action, followed by a color from a list, followed by the thing to be closed, also from a list. This ‘close’ command will be triggered by phrases like ‘close the door’, ‘close the red door’, and ‘close the yellow window’.

    <Command Name="Close">
      <!-- The Command example appears in the drill-down help page for your app -->
      <Example> Close door </Example>

      <!-- ListenFor elements provide ways to say the command, including references to
           {PhraseLists} and {PhraseTopics} as well as [optional] words -->
      <ListenFor> Close {colors} {closables} </ListenFor>
      <ListenFor> Close the {colors} {closables} </ListenFor>
      <ListenFor> Close your {colors} {closables} </ListenFor>

      <!-- Feedback provides the displayed and spoken text when your command is triggered -->
      <Feedback> Closing {closables} </Feedback>

      <!-- Navigate specifies the desired page or invocation destination for the Command -->
      <!-- Silverlight only, WinRT and Universal apps deal with this themselves. -->
      <!-- But it's mandatory according to the XSD. -->
      <Navigate Target="MainPage.xaml" />
    </Command>

    <PhraseList Label="colors">
      <Item> yellow </Item>
      <Item> green </Item>
      <Item> red </Item>
      <!-- Fake item to make the color optional -->
      <Item> a </Item>
    </PhraseList>

    <PhraseList Label="closables">
      <Item> door </Item>
      <Item> window </Item>
      <Item> mouth </Item>
    </PhraseList>

You can add optional terms to the ListenFor elements so that sentences like ‘Would you be so kind to close your mouth, please?’ would also trigger the close command. What you cannot do is define a phrase list as optional: square brackets and curly brackets cannot surround the same term. As a workaround I added a dummy color called ‘a’. The fuzzy recognition logic will map ‘close door’ to ‘close a door’ and put ‘a’ and ‘door’ in the semantic properties of the speech recognition result. Here’s how the sample app evaluates these properties to figure out how to proceed:

    var semanticProperties = speechRecognitionResult.SemanticInterpretation.Properties;

    // Figure out the color.
    if (semanticProperties.ContainsKey("colors"))
    {
        this.InfoText.Text += (string.Format("\nColor: {0}", semanticProperties["colors"][0]));
    }

    // Figure out the closable.
    if (semanticProperties.ContainsKey("closables"))
    {
        this.InfoText.Text += (string.Format("\nClosable: {0}", semanticProperties["closables"][0]));
    }
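The feature list at the top mentions that the app modifies one of the phrase lists programmatically. That code is not shown in this article. The following sketch shows how it could be done with VoiceCommandSet.SetPhraseListAsync, assuming the englishCommands command set and the colors phrase list from the VCD above. The PhraseListUpdater class, the UpdateColorsAsync method and the extra color ‘blue’ are illustrative:

```csharp
using System;
using System.Threading.Tasks;
using Windows.Media.SpeechRecognition;

public static class PhraseListUpdater
{
    /// <summary>
    /// Replaces the items of the 'colors' phrase list in the installed command set.
    /// Hypothetical helper; the sample app's own implementation may differ.
    /// </summary>
    public static async Task UpdateColorsAsync()
    {
        VoiceCommandSet commandSet;
        if (VoiceCommandManager.InstalledCommandSets.TryGetValue("englishCommands", out commandSet))
        {
            // The list passed in is expected to replace the current items,
            // so it repeats the existing colors and the dummy 'a' item.
            await commandSet.SetPhraseListAsync(
                "colors",
                new[] { "yellow", "green", "red", "blue", "a" });
        }
    }
}
```

The command sets only show up in InstalledCommandSets after the VCD file has been registered, so a call like this belongs after the registration step.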

Continuing the conversation inside the app

Cortana’s responsibilities stop once she has started your app via a spoken or typed command. If you want to continue the conversation (e.g. to ask for more details) then you have to do this inside your app. When the Kwebble sample app is started with a ‘close the door’ command without a color, she will ask for the missing color and evaluate your answer. Here’s how she detects the command with the missing color (remember: ‘a’ is the missing color):

    // Ask for the missing color, when closing the door.
    if (speechRecognitionResult.RulePath[0] == "Close" &&
        semanticProperties["closables"][0] == "door" &&
        semanticProperties["colors"][0] == "a")
    {
        // ...

        if (speechRecognitionResult.CommandMode() == CommandModes.Voice)
        {
            var voice = new Voice(this.MediaElement);

            // Subscribe before speaking, so the completion event cannot be missed.
            voice.Speaking_Completed += Voice_Speaking_Completed;
            voice.Say("Which door do you want me to close?");
        }
    }
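The condition above indexes the semantic properties directly, which throws a KeyNotFoundException when a key is absent. A small defensive helper can avoid that. This is a sketch with a hypothetical FirstValueOrDefault extension method, written against the read-only dictionary interface that SemanticInterpretation.Properties exposes to C#:

```csharp
using System;
using System.Collections.Generic;

public static class SemanticPropertiesExtensions
{
    /// <summary>
    /// Returns the first value stored under the key, or the fallback
    /// when the key is missing or its value list is empty.
    /// </summary>
    public static string FirstValueOrDefault(
        this IReadOnlyDictionary<string, IReadOnlyList<string>> properties,
        string key,
        string fallback = null)
    {
        IReadOnlyList<string> values;
        if (properties.TryGetValue(key, out values) && values != null && values.Count > 0)
        {
            return values[0];
        }

        return fallback;
    }
}
```

With such a helper, the color check could be written as semanticProperties.FirstValueOrDefault("colors") == "a", and a missing key would simply make the condition false.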

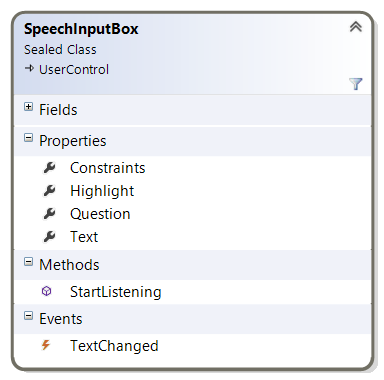

The Kwebble app comes with a Speech Input Box control, an improved version of the one I introduced in my previous blog post. It exposes its Text and its Constraints collection so you can change these. You can now fluently continue the conversation by triggering the ‘listening’ mode programmatically (skipping an obsolete Tap). And there’s more: I added the Cortana sound effect when the control starts listening.

Here’s what happens just before Kwebble asks you which door to close. The speech input box is prepared to recognize a specific answer to the question:

    var storageFile = await StorageFile.GetFileFromApplicationUriAsync(new Uri("ms-appx:///Assets/SpeechRecognitionGrammar.xml"));
    var grammarFileConstraint = new Windows.Media.SpeechRecognition.SpeechRecognitionGrammarFileConstraint(storageFile, "colors");

    this.SpeechInputBox.Constraints.Clear();
    this.SpeechInputBox.Constraints.Add(grammarFileConstraint);
    this.SpeechInputBox.Question = "Which door?";

She only starts listening after she has finished asking the question. Otherwise she would start listening to herself. Seriously, I am not kidding!

    private void Voice_Speaking_Completed(object sender, EventArgs e)
    {
        this.SpeechInputBox.StartListening();
    }

Natural phrases in the conversation

I already mentioned that the Voice Command Definitions for Cortana activation are quite capable of dealing with natural language. When you take over the conversation in your app, it gets even better. Using a SpeechRecognitionGrammarFileConstraint you can tell the SpeechInputBox (and the embedded SpeechRecognizer) the specific pattern to listen for, in Speech Recognition Grammar Specification (SRGS) XML format. When Kwebble asks you which door to close, she’s only interested in phrases that contain one of the door colors (red, green, yellow). Here’s the SRGS grammar that recognizes these. It just listens for color names and ignores all the rest:

    <grammar xml:lang="en-US"
             root="colorChooser"
             tag-format="semantics/1.0"
             version="1.0"
             xmlns="http://www.w3.org/2001/06/grammar">

      <!-- The following rule recognizes any phrase with a color. -->
      <!-- It's defined as the root rule of the grammar. -->
      <rule id="colorChooser">
        <ruleref special="GARBAGE"/>
        <ruleref uri="#color"/>
        <ruleref special="GARBAGE"/>
      </rule>

      <!-- The list of colors that are recognized. -->
      <rule id="color">
        <one-of>
          <item>
            red <tag> out="red"; </tag>
          </item>
          <item>
            green <tag> out="green"; </tag>
          </item>
          <item>
            yellow <tag> out="yellow"; </tag>
          </item>
        </one-of>
      </rule>
    </grammar>
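The SpeechInputBox control wraps a SpeechRecognizer. For readers who want to try the grammar without the control, here is a sketch of the underlying calls. It assumes the grammar is stored as Assets/SpeechRecognitionGrammar.xml, as in the earlier snippet. The ColorListener class and its ListenForColorAsync method are illustrative names, not part of the sample app:

```csharp
using System;
using System.Threading.Tasks;
using Windows.Media.SpeechRecognition;
using Windows.Storage;

public static class ColorListener
{
    /// <summary>
    /// Listens once with the color grammar and returns the recognized text,
    /// or null when compilation or recognition did not succeed.
    /// Hypothetical helper, not part of the sample app.
    /// </summary>
    public static async Task<string> ListenForColorAsync()
    {
        var file = await StorageFile.GetFileFromApplicationUriAsync(
            new Uri("ms-appx:///Assets/SpeechRecognitionGrammar.xml"));

        using (var recognizer = new SpeechRecognizer())
        {
            recognizer.Constraints.Add(new SpeechRecognitionGrammarFileConstraint(file, "colors"));

            // Constraints must be compiled before recognition can start.
            var compilation = await recognizer.CompileConstraintsAsync();
            if (compilation.Status != SpeechRecognitionResultStatus.Success)
            {
                return null;
            }

            SpeechRecognitionResult result = await recognizer.RecognizeAsync();
            return result.Status == SpeechRecognitionResultStatus.Success ? result.Text : null;
        }
    }
}
```

RecognizeAsync listens without showing any UI, which is why a control like the SpeechInputBox adds its own visual and audio feedback on top of it.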

Here are some screenshots from the conversation. Kwebble recognizes the missing color, then asks for it and starts listening for the answer; then she recognizes the color:

That’s all folks!

Here’s the full code of the sample app: the XAML, the C#, and last but not least the different powerful XML files. The solution was created with Visual Studio 2013 Update 3: U2UC.WinUni.Cortana.zip (164.6KB)

Enjoy!

XAML Brewer