This article explains how to create fast queries against a LexDB database in a Windows 8 Store app, and how to keep these queries fast. LexDB is a lightweight, in-process object database engine. It is written in C# and can be used in .NET, Silverlight, Windows Phone, Windows Store, and Xamarin projects. For an introduction to using the engine in a Windows 8 Store app, I refer to a previous blog article of mine.

LexDB is an object database, it is not as relational as e.g. SQLite. But it still comes with the possibility of indexing, and it has commands to reorganize the stored data and release storage. This article zooms in on these features. Here’s a screenshot of the attached sample app. It requests for the size of the Person table to measure, and it comes with buttons to trigger a database reorganization (when you notice that the queries’ performance goes down) and to start measuring a SELECT statement using different indexes:

The app is of course a port of my previous blog post on SQLite. It stores the same business object (Person):

/// <summary>

/// Represents a person.

/// </summary>

internal class Person

{

/// <summary>

/// Gets or sets the identifier.

/// </summary>

public int Id { get; set; }

/// <summary>

/// Gets or sets the name.

/// </summary>

public string Name { get; set; }

/// <summary>

/// Gets or sets the description.

/// </summary>

public string Description { get; set; }

/// <summary>

/// Gets or sets the status.

/// </summary>

public int Status { get; set; }

/// <summary>

/// Gets or sets the day of birth.

/// </summary>

public DateTime BirthDay { get; set; }

/// <summary>

/// Gets or sets the picture.

/// </summary>

public byte[] Picture { get; set; }

}

When I migrated the database code from SQLite to LexDB I immediately noticed that SELECT statements in LexDB are pretty fast, but INSERT and DELETE statements are an order of magnitude slower, and run slower as the table size increases. That’s definitely something to consider, but as long as you stick to asynchronous calls, and as long as your app does not do any bulk operations, it’s nothing to really worry about. The performance of INSERT and DELETE statements surely had an impact on my sample app: the original app simply recreated the table when the target size was changed. With LexDB this isn’t an option anymore: the app now adds only the missing objects, or removes them. This allows you to gradually build up a large test table. So when you set a new size for the table, please use relatively small increments (depending on your hardware): you’d be amazed how long it takes to insert 1000 new objects into the table!

Here’s the initialization of the database. It logs the whereabouts of the physical files so you can monitor these. And it creates a table with two indexes: an index only on name, and a ‘covering’ index on name and status:

static Dal()

{

// Reveal the location of the database folder

Debug.WriteLine(string.Format(@"Databases are stored at {0}\Lex.Db\.", ApplicationData.Current.LocalFolder.Path));

// Create database

db = new DbInstance("storage");

// Define table mapping

db.Map<Person>().Automap(p => p.Id, true).WithIndex("ix_Person_Name", p => p.Name).WithIndex("ix_Person_Name_Status", p => p.Name, p => p.Status);

// Initialize database

db.Initialize();

}

Here’s the benchmark query that retrieves a filtered list of persons:

public static List<Person> GetPersons()

{

return db.Table<Person>().Where(p => p.Name == "James" && p.Status == 2).ToList();

}

Since LexDB is an object database, any query against the Person table will return a list of fully populated Person instances. There’s no way to do any projection to return only Id, Name, and Status. [Maybe there is a way, but I didn’t find it. After all, the official documentation is a short series of blog posts and the source code.] All query plans will eventually end up in the base table.

If you want to use an index for a query, then you have to tell it to the engine upfront:

public static List<Person> GetPersonsIndex()

{

// Horrible performance

// return db.Table<Person>().IndexQueryByKey("ix_Person_Name", "James").ToLazyList().Where(p => p.Value.Status == 2).Select(p => p.Value).ToList();

return db.Table<Person>().IndexQueryByKey("ix_Person_Name", "James").ToList().Where(p => p.Status == 2).ToList();

}

The IndexQueryByKey returns the primary keys of the requested objects (WHERE name=?), and the query plan continues in the base table (file) to filter out the remaining objects (WHERE status=?). That’s why I didn’t notice any performance improvements: in most cases, the raw query ran even faster.

So unless you’re looking for a very scarce value, a regular index on a LexDB table will NOT be very helpful. The same is true of course in SQL Server: indexes with a low density will be ignored. But in a relational database it’s the engine that decides whether or not to use an index, here the decision is up to you.

So let’s verify the impact of a covering index. Mind that the term ‘covering’ here only applies to the WHERE-clause, since there’s no way to skip the pass through the base table:

public static List<Person> GetPersonsCoveringIndex()

{

return db.Table<Person>().IndexQueryByKey("ix_Person_Name_Status", "James", 2).ToList();

}

As you see in the above app screenshots, the ‘covered’ query runs faster than the original query in all cases. But on the other hand: the difference is not that significant and will probably not be noticed by the end users. I can imagine that the real added value of these indexes will appear in more complex queries (e.g. when joining multiple tables).

Let’s jump to the administrative part. The fragmentation caused by INSERT, UPDATE and DELETE statements has a bad influence on indexes: they get gradually slower over time. In most relational databases this is a relatively smooth process. It can be stopped by reorganizing or rebuilding the index. In LexDB this also happens, but the degradation is a less than smooth process. If you add and remove a couple of times a block of let’s say 1000 objects, then you’ll observe only subtle changes in the response time of the SELECT statements. Very suddenly the basic SELECT statement runs about ten times slower, while the indexed queries continue to do their job with the same response time.

At that moment, it’s time to rebuild the base table, and optionally release storage:

public static void Reorganize()

{

Debug.WriteLine("Before compacting:");

LogPersonInfo();

// Reorganizes the file (huge impact on performance).

db.Table<Person>().Compact();

// For the sake of completeness.

db.Flush<Person>();

db.Flush();

Debug.WriteLine("After compacting:");

LogPersonInfo();

}

private static void LogPersonInfo()

{

var info = db.Table<Person>().GetInfo();

Debug.WriteLine(string.Format("* Person Data Size: {0}", info.DataSize));

Debug.WriteLine(string.Format("* Person Effective Data Size: {0}", info.EffectiveDataSize));

Debug.WriteLine(string.Format("* Person Index Size: {0}", info.IndexSize));

}

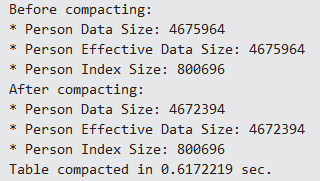

Immediately, everything is back to normal:

Compacting a fragmented table is a very rapid operation (less than a second) and it has an immediate result on the queries.

Compacting the data is something you may want to do on start-up, e.g. in an extended splash screen routine.

Even though LexDB is not as advanced as SQLite, it comes with the necessary infrastructure to get some speed and maintain it.

That’s all for today. Here’s the code, it was written in Visual Studio 2013 for Windows 8.1: U2UC.WinRT.LexDbIndexing.zip (852.7KB)

Enjoy!

Diederik