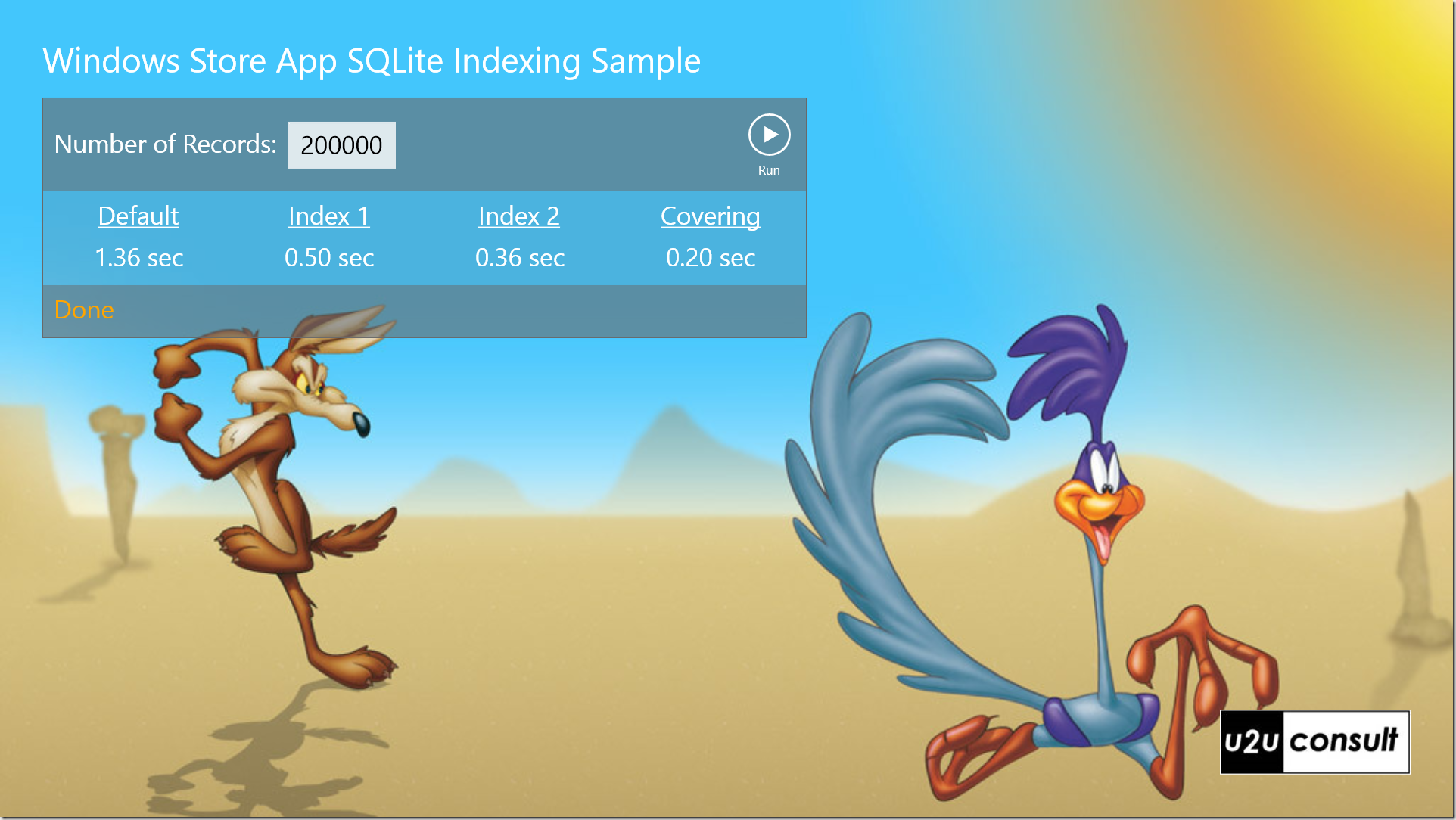

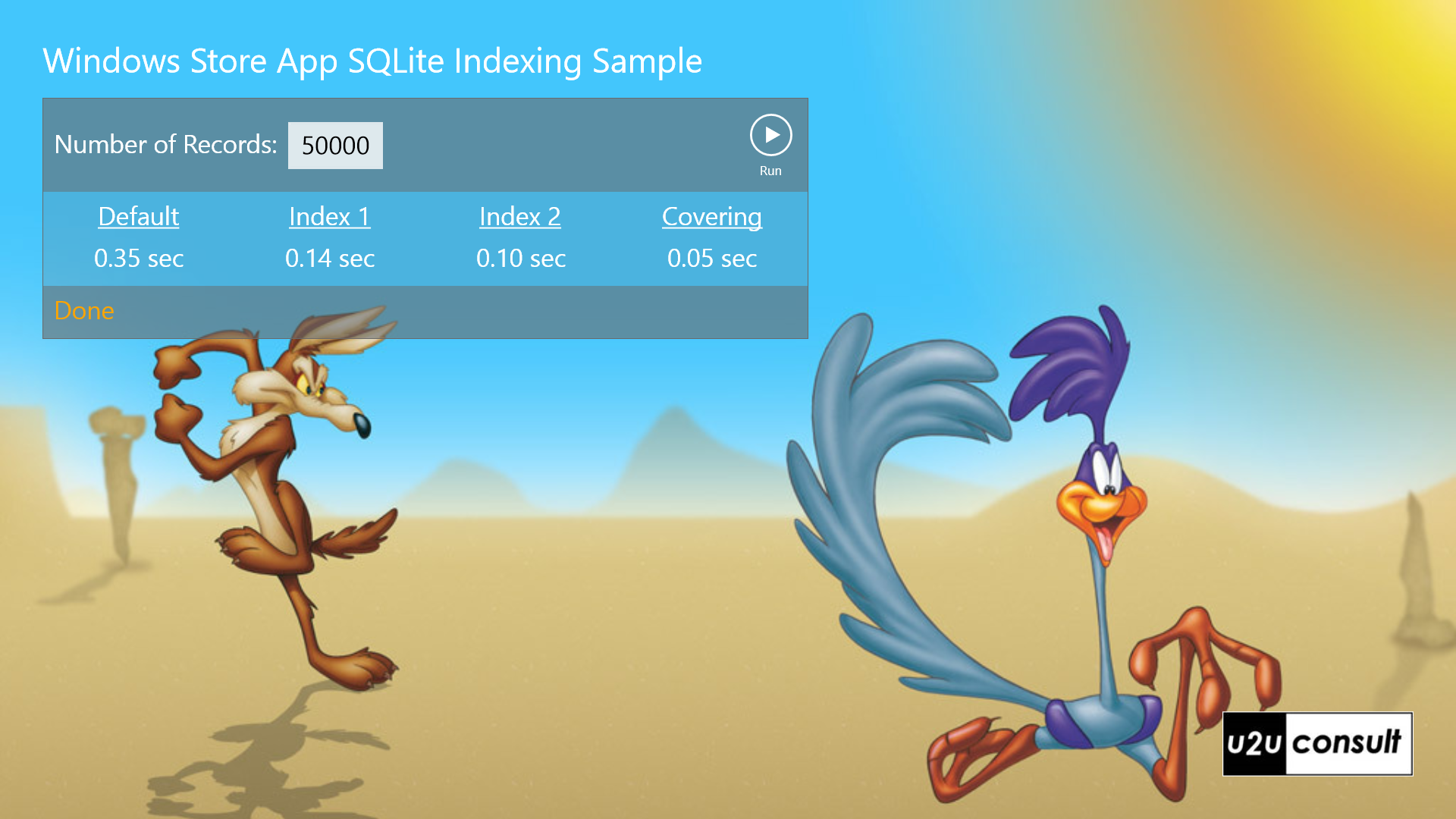

This article explains how to monitor and optimize a SQLite query in a Windows 8 Store app by adding indexes and/or rewriting the query. I’ll be using the WinRT SQLite wrapper from the Visual Studio Gallery. I assume that you know how to install and use it, but feel free to check a previous blog post of mine for an introduction. As usual I created a small app. It allows you to select the number of records to insert in a table of Persons. The app then measures the execution time of a SELECT statement against that table, using four different strategies. Here’s how the app looks like:

This is the definition of Person, a more or less representative business model class:

/// <summary>

/// Represents a person.

/// </summary>

internal class Person

{

/// <summary>

/// Gets or sets the identifier.

/// </summary>

[PrimaryKey, AutoIncrement]

public int Id { get; set; }

/// <summary>

/// Gets or sets the name.

/// </summary>

[MaxLength(64)]

public string Name { get; set; }

/// <summary>

/// Gets or sets the description.

/// </summary>

public string Description { get; set; }

/// <summary>

/// Gets or sets the status.

/// </summary>

/// <remarks>Is an enum in the viewmodel.</remarks>

public int Status { get; set; }

/// <summary>

/// Gets or sets the day of birth.

/// </summary>

public DateTime BirthDay { get; set; }

/// <summary>

/// Gets or sets the picture.

/// </summary>

/// <remarks>Is a blob in the database.</remarks>

public byte[] Picture { get; set; }

}

The query that we’re going to monitor uses projection (not all columns are fetched – no SELECT *) as well as filtering (not all rows are fetched – there’s a WHERE clause). We’re only interested in the Id, Name, and Status columns of the persons named James with a status of 2. I started with the following query:

public static List<Person> GetPersonsOriginal()

{

using (var db = new SQLiteConnection(DbPath))

{

// Activate Tracing

db.Trace = true;

var result = from p in db.Table<Person>()

where p.Name == "James" && p.Status == 2

select new Person() { Id = p.Id, Name = p.Name, Status = p.Status };

return result.ToList();

}

}

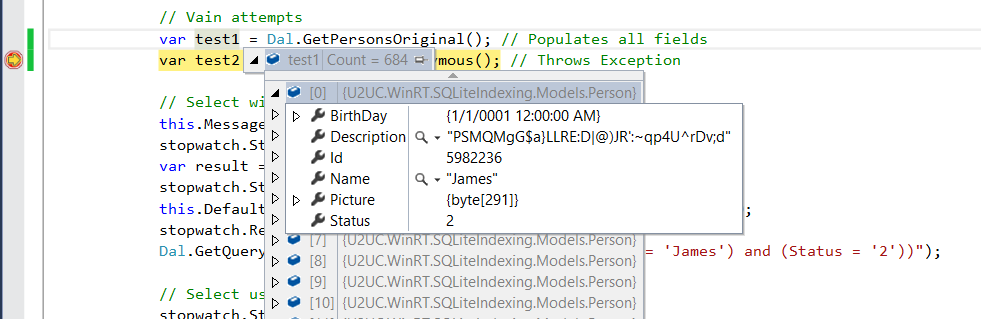

To my big surprise I noticed that the non-selected fields (e.g. Description and Image) were filled too in the result set. The projection step was clearly not executed:

Next, I tried to return an anonymous type:

public static List<object> GetPersonsAnonymous()

{

using (var db = new SQLiteConnection(DbPath))

{

// Activate Tracing

db.Trace = true;

var result = from p in db.Table<Person>()

where p.Name == "James" && p.Status == 2

select new { Id = p.Id, Name = p.Name, Status = p.Status };

return result.ToList<object>();

}

}

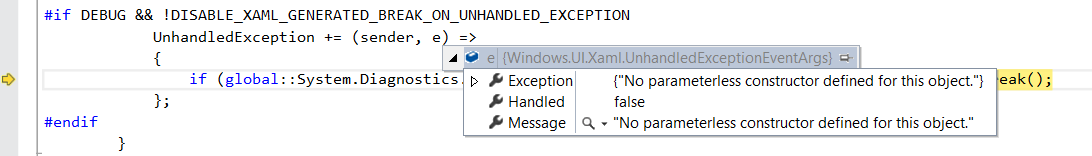

Unfortunately it threw an exception:

I should have known: the WinRT wrapper relies heavily on LINQ but the SQLite runtime itself is written in unmanaged C, so it’s allergic to anonymous .NET classes.

It turns out that when using the SQLite wrapper to run a query that returns a result set, you have to provide the signature of that result. The LINQ query calls Query<T> in the SQLite class and needs to provide the type parameter. Since I just needed a couple of columns, I created a Result class to host the query’s return values:

internal class Result

{

/// <summary>

/// Gets or sets the identifier.

/// </summary>

public int Id { get; set; }

/// <summary>

/// Gets or sets the name.

/// </summary>

public string Name { get; set; }

/// <summary>

/// Gets or sets the status.

/// </summary>

public int Status { get; set; }

}

Here’s how the second version of the query looks like:

public static List<Result> GetPersons()

{

using (var db = new SQLiteConnection(DbPath))

{

// Activate Tracing

db.Trace = true;

var result = from p in db.Table<Person>()

where p.Name == "James" && p.Status == 2

select new Result() { Id = p.Id, Name = p.Name, Status = p.Status };

return result.ToList();

}

}

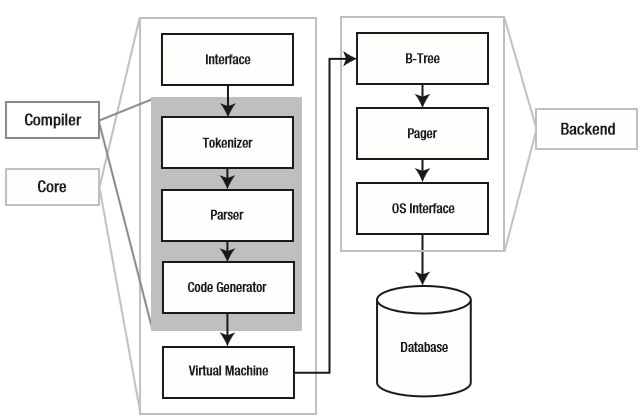

I must admit that -even for a very large table- the results come back very rapidly. I guess that most apps do not require any SQLite performance tuning. Asynchronous SELECT statements will suffice, and these are provided by the WinRT wrapper. SQLite is so fast because it is a genuine relational database: its data is not simply serialized, but stored and (proactively) cached in fixed-size pages that are hooked into B-trees (for indexes) or B+-trees (for tables). Here’s an overview of the internal mechanisms, the illustration comes out of the definitive guide to SQLite.

As a first optimization, I decorated the database with an index on the Name column:

public static void CreateIndex()

{

using (var db = new SQLiteConnection(DbPath))

{

// Activate Tracing

db.Trace = true;

db.Execute("CREATE INDEX `ix_person_name` ON `Person` (`Name` ASC)");

}

}

The query ran about three times faster. That’s not bad.

Then I added an index on all requested fields, a so-called covering index. Theoretically this is the fastest way to get the data: everything is found in the index itself, there’s no need to read the table. Here’s the covering index definition:

public static void CreateCoveringIndex()

{

using (var db = new SQLiteConnection(DbPath))

{

// Activate Tracing

db.Trace = true;

db.Execute("CREATE INDEX `ix_person_name_status` ON `Person` (`Name` ASC, 'Status' ASC)");

}

}

On average, this query runs four times faster than the original. It’s getting better.

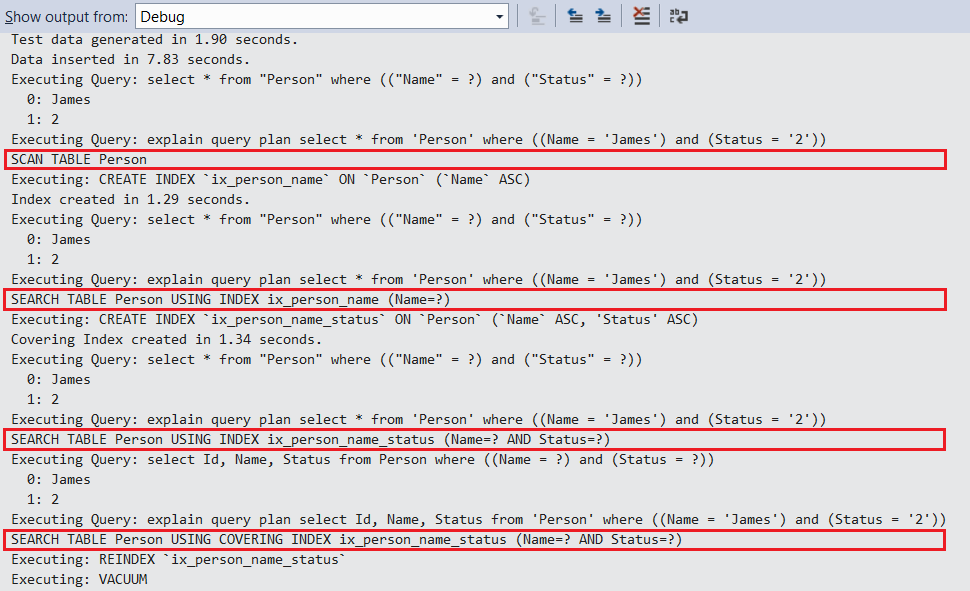

Then I activated the tracing and started to add the extra measurements, e.g. on the creation of the indexes. The database trace revealed the query for the so-called covering index:

select * from "Person" where (("Name" = ?) and ("Status" = ?))

That’s still a SELECT *, so the LINQ-based query was actually selecting ALL of the columns. It turns out that the SQLite wrapper calls Query<T> with the table type (Person) as T. I expected it would use the projected type (Result). That’s the reason why all fields were populated in the very first query. I wanted to measure a real covering index, so I decided to call Query<T> myself, with a custom query (SQL-based rather than LINQ-based) that fetches only the projected columns:

public static List<Result> GetPersons2()

{

using (var db = new SQLiteConnection(DbPath))

{

// Activate Tracing

db.Trace = true;

var result = db.Query<Result>("select Id, Name, Status from Person where ((Name = ?) and (Status = ?))", "James", 2);

return result.ToList();

}

}

[Instead of providing the Result type, I think I could have created a TableMapping programmatically. TableMapping smells like SQLite’s version of a strongly typed ADO.NET dataset. When I come to think of it: it would be nice to have a designer for this in Visual Studio.]

Anyway, this last query runs about five times faster than the original one, and the trace was not showing a SELECT * anymore:

select Id, Name, Status from Person where ((Name = ?) and (Status = ?))

But still I wanted to double check the used strategy: SQLite has the “EXPLAIN QUERY PLAN” syntax to reveal the actual query plan. After some vain calls, I decided to apply the same approach as for the custom SELECT query: define a type for the result set, and call Query<T>. Neither in SQLite nor in the wrapper did I find a way to discover the signature of the result. It would be nice to have something like the SET FMTONLY from SQL Server. Fortunately I found a description of the results in the official SQLite documentation. Here’s the structure of a SQLite query plan line:

public class QueryPlanLine

{

public int selectid { get; set; }

public int order { get; set; }

public int from { get; set; }

public string detail { get; set; }

}

Here’s a method to log a query plan:

public static void GetQueryPlan(string query)

{

using (var db = new SQLiteConnection(DbPath))

{

// Activate Tracing

db.Trace = true;

// Get query plan

List<QueryPlanLine> queryPlan = db.Query<QueryPlanLine>(string.Format("explain query plan {0}", query));

foreach (var line in queryPlan)

{

Debug.WriteLine(line.detail);

}

};

}

Here’s the list of different query plans for the four tested strategies: it ranges from a table scan to a covering index:

Achievement unlocked! :-)

Here’s the app again after it went through the different optimizations. It is clearly getting faster at each step:

Just remember that in a busy database the indexes need to be rebuilt/reorganized from time to time. That can be done in SQLite with the REINDEX statement. Here’s how to rebuild the covering index:

public static void ReorganizeCoveringIndex()

{

using (var db = new SQLiteConnection(DbPath))

{

// Activate Tracing

db.Trace = true;

db.Execute("REINDEX `ix_person_name_status`");

}

}

I guess you can build an auto-tuning mechanism to discover slow indexes with the ANALYZE statement, and then rebuild these. But it’s probably easier rebuild the whole database from time to time *and* release disk space whilst doing that. The magic word in SQLite for that is: VACUUM.

public static void RebuildDatabase()

{

using (var db = new SQLiteConnection(DbPath))

{

// Activate Tracing

db.Trace = true;

db.Execute("VACUUM");

}

}

Although SQLite is impressively fast, I was able to make a simple query on a single table more than 5 times faster with just a small effort. I assume that you could achieve even better results on a complex join. You can easily performance tune SQLite queries by creating indexes and/or taking control of the SELECT statement yourself.

Here’s the code, it was written in Visual Studio 2013 for Windows 8.1: U2UC.WinRT.SQLiteIndexing.zip (257.7KB)

Enjoy!

Diederik