First of all, all code is available here.

Locks in .NET

Locks should protect the critical sections of your code: the parts that, for whatever reason, only one thread may execute at any given time. Critical sections should be fast and small. However, when it comes to scalability, locks often cause problems. Basically, all your threads have to stand in line and wait to execute that piece of code. So, it is best to avoid locks in such environments. But what if you can't?

Some Code

Consider the following piece of code:

    public async Task<IActionResult> NotifyAsync(int paymentid)
    {
        //get from db
        var payment = await db.Payments.FindAsync(paymentid);

        //talk to service
        var delta = await service.GetPaymentDeltaAsync(payment);
        MergePayment(payment, delta);

        //store in db
        await db.SaveChangesAsync();

        return Ok(paymentid);
    }

What happens here is that some external provider tells my application that I should update an object in my database. For example, the status of a payment might have been changed. I need to do the following things:

- fetch the object from the database

- call a web service to get the latest values

- store the object in the database

Unfortunately, there is a weird construct here. The web service doesn't reply with all the current values, but only with the ones that have been modified since my last call. That means I have to merge what I receive with the object that I have.

In other words, I could have a race condition:

- thread1 reads payment

- thread2 reads payment

- thread1 makes web service call and gets correct delta

- thread1 updates payment

- thread2 makes web service call and gets a delta relative to thread1's updated payment

- thread2 updates payment incorrectly
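
The interleaving above can be replayed step by step without any real threads. The sketch below is only an illustration: `Payment`, `FakeDeltaService` and `RaceReplay` are hypothetical stand-ins for the database row and the delta-only web service, and it assumes that saving writes the whole object back.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-ins; none of these types come from the original application.
public record Payment(string Status, int Amount);

public class FakeDeltaService
{
    private readonly Payment _current;   // the provider's real, current state
    private Payment _lastReported;       // what the caller was told on the previous call

    public FakeDeltaService(Payment lastReported, Payment current)
    {
        _lastReported = lastReported;
        _current = current;
    }

    // Returns only the fields that changed since the previous call.
    public Dictionary<string, object> GetDelta()
    {
        var delta = new Dictionary<string, object>();
        if (_current.Status != _lastReported.Status) delta["Status"] = _current.Status;
        if (_current.Amount != _lastReported.Amount) delta["Amount"] = _current.Amount;
        _lastReported = _current;
        return delta;
    }
}

public static class RaceReplay
{
    // Applies the delta on top of the given copy of the payment.
    public static Payment Merge(Payment payment, Dictionary<string, object> delta)
    {
        var status = delta.TryGetValue("Status", out var s) ? (string)s : payment.Status;
        var amount = delta.TryGetValue("Amount", out var a) ? (int)a : payment.Amount;
        return new Payment(status, amount);
    }

    // Replays the interleaving in order and returns what ends up stored.
    // Assumption: a save overwrites the whole stored record.
    public static Payment Run()
    {
        var stored = new Payment("Pending", 100);
        var service = new FakeDeltaService(stored, new Payment("Paid", 100));

        var copy1 = stored;                          // thread1 reads payment
        var copy2 = stored;                          // thread2 reads payment
        stored = Merge(copy1, service.GetDelta());   // thread1: delta contains Status, saved
        stored = Merge(copy2, service.GetDelta());   // thread2: empty delta merged into stale copy
        return stored;
    }
}
```

Under these assumptions `RaceReplay.Run()` ends with the status back at "Pending", even though the provider reported "Paid": thread2's delta is empty, so its stale copy wins.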

Of course, the sensible thing to do here is to put these messages on a queue and process them one-by-one. But let's say that that's not an option.

Solutions

I'm using Firefox to simulate a bunch of network calls; we get a nice waterfall view of the result. (I did have to increase the maximum number of concurrent calls.)

I spam the server with the notifications for the following payments:

- first three separate ones: 1,2,3

- then three times 1: 1,1,1

- then three times 2: 2,2,2

- then three times 3: 3,3,3

Messages are sent with an interval of 50ms just to maintain order. The web service takes 1 second to respond, which is super slow in comparison.

Let's look at 3 solutions:

- no lock

- lock for all

- lock per id

No Lock

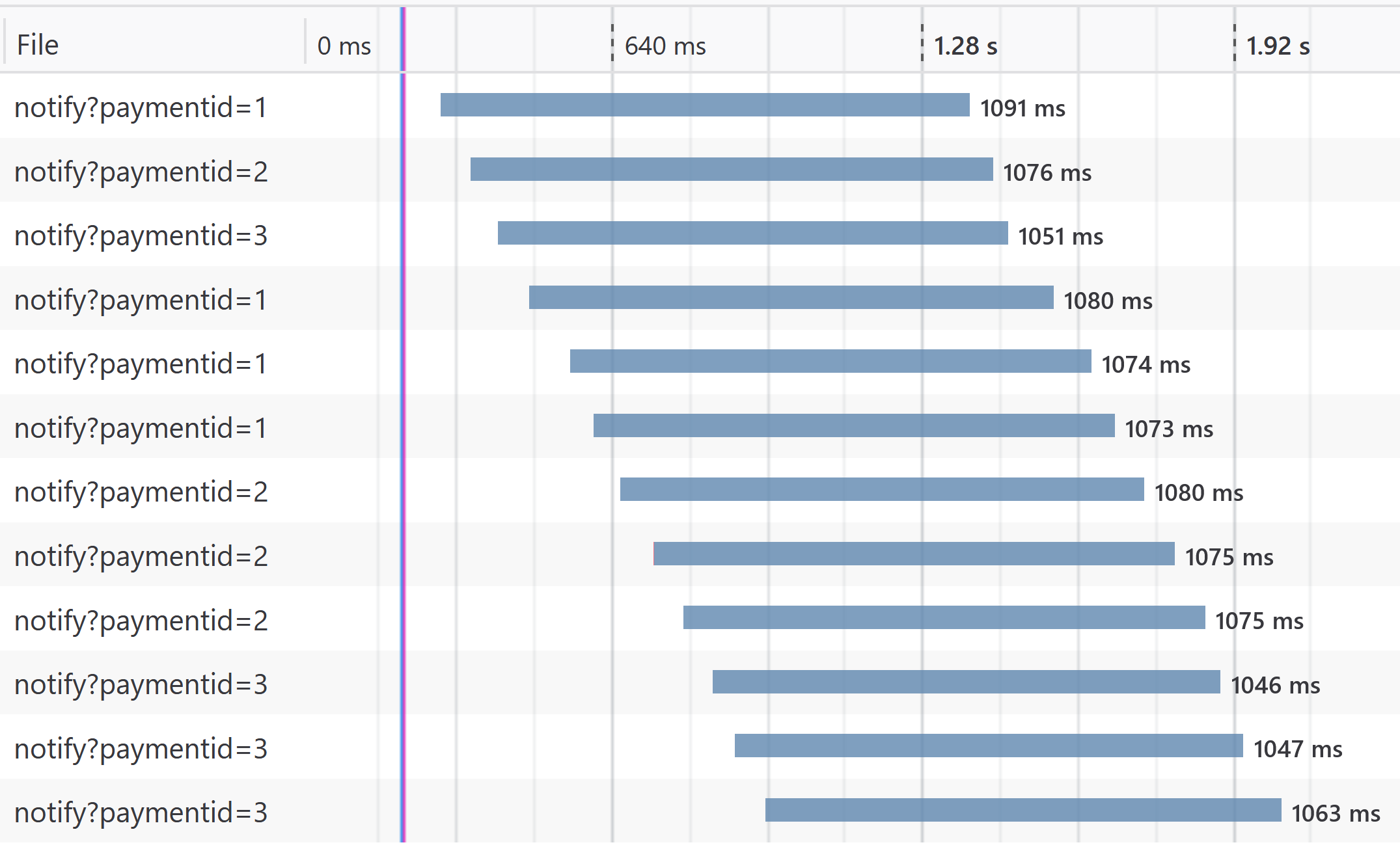

Well this is not a solution of course but let's see the result as a reference:

Every call takes about one second, they all execute simultaneously, and mistakes are bound to happen.

Lock All

The answer to this mess is using a lock. Actually, I'll be using a SemaphoreSlim, since you can't await inside a lock statement. This restriction makes sense because critical sections should be small and fast, hence synchronous. But that's not our current situation.

Let's look at the code:

    private static SemaphoreSlim semaphore = new SemaphoreSlim(1, 1);

    [HttpGet("notifylockall")]
    public async Task<IActionResult> NotifyLockAll(int paymentid)
    {
        await semaphore.WaitAsync();
        try
        {
            //get from db
            var payment = await db.Payments.FindAsync(paymentid);

            //talk to service
            var delta = await service.GetPaymentDeltaAsync(payment);
            MergePayment(payment, delta);

            //store in db
            await db.SaveChangesAsync();
        }
        finally
        {
            semaphore.Release();
        }

        return Ok(paymentid);
    }

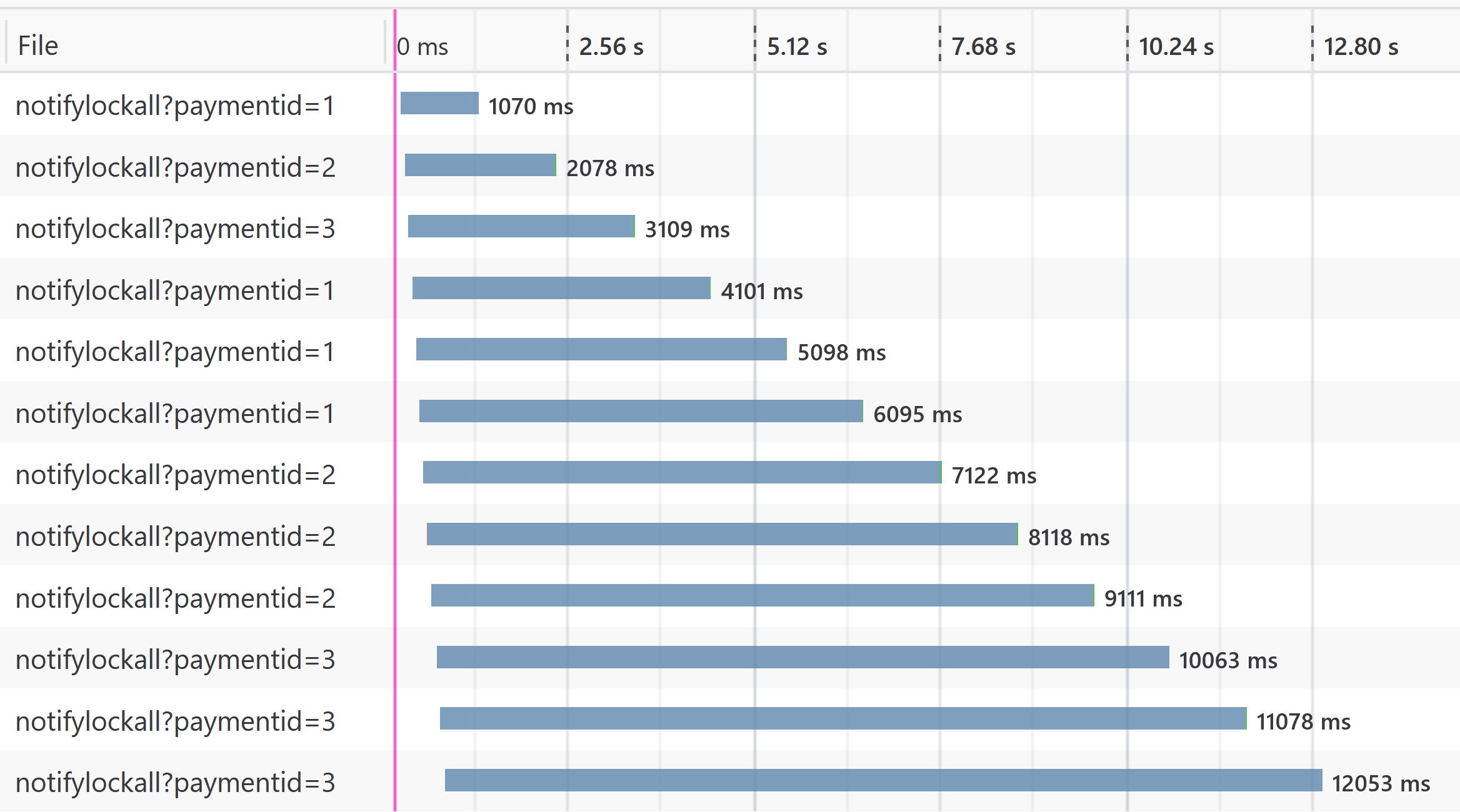

And here are the results:

As you can see the last call takes about 12 seconds. No race conditions will happen, but all calls have to wait for each other to finish. This is not required since payment 1 doesn't have a racing issue with payment 2. This is the enemy of scalability.

Lock Per Id

So, can we lock per id? Yes we can!

That means we will need a semaphore per id. If you have many payments (and we do), this could lead to many semaphores in memory. So, we'll do the following, for every incoming request:

- If no semaphore exists for this id, make one

- If one does exist, use that one

- If nobody is using the semaphore, clean it up

To achieve this, I'll be using a WeakValueDictionary<int,SemaphoreSlim>. This keeps a WeakReference to the semaphore. A WeakReference will not prevent the object from being garbage collected, so unused semaphores will be cleaned up. While a semaphore is being used to handle a notification, a normal (strong) reference to it exists, so it will not be garbage collected.

Here is the implementation:

    [HttpGet("notifylockperid")]
    public async Task<IActionResult> NotifyLockPerId(int paymentid)
    {
        var semaphore = GetSemaphoreForPayment(paymentid);
        await semaphore.WaitAsync();
        try
        {
            //get from db
            var payment = await db.Payments.FindAsync(paymentid);

            //talk to service
            var delta = await service.GetPaymentDeltaAsync(payment);
            MergePayment(payment, delta);

            //store in db
            await db.SaveChangesAsync();
        }
        finally
        {
            semaphore.Release();
        }

        return Ok(paymentid);
    }

    //https://www.nuget.org/packages/Weakly/
    private static WeakValueDictionary<int, SemaphoreSlim> semaphores
        = new WeakValueDictionary<int, SemaphoreSlim>();

    private SemaphoreSlim GetSemaphoreForPayment(int paymentId)
    {
        lock (semaphores)
        {
            var semaphore = semaphores.GetValueOrDefault(paymentId);
            if (semaphore == null)
            {
                semaphore = new SemaphoreSlim(1, 1);
                semaphores.Add(paymentId, semaphore);
            }

            return semaphore;
        }
    }
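
If you'd rather not pull in the Weakly package, the same idea can be approximated with the built-in `WeakReference<T>`. This is a minimal sketch, not Weakly's implementation: `WeakSemaphoreMap`, `GetOrCreate` and `Prune` are names made up for this example.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;

// Hypothetical stand-in for a weak-valued dictionary, limited to what this post needs.
public sealed class WeakSemaphoreMap
{
    private readonly Dictionary<int, WeakReference<SemaphoreSlim>> _map = new();
    private readonly object _gate = new();

    // Returns the live semaphore for this id, or creates and registers a new one.
    public SemaphoreSlim GetOrCreate(int id)
    {
        lock (_gate)
        {
            // A live target means some request still holds a strong reference to it.
            if (_map.TryGetValue(id, out var weak) && weak.TryGetTarget(out var existing))
                return existing;

            var created = new SemaphoreSlim(1, 1);
            // The indexer also overwrites an entry whose semaphore was already collected.
            _map[id] = new WeakReference<SemaphoreSlim>(created);
            return created;
        }
    }

    // Removes entries whose semaphore has been garbage collected; returns how many were removed.
    public int Prune()
    {
        lock (_gate)
        {
            var dead = new List<int>();
            foreach (var pair in _map)
                if (!pair.Value.TryGetTarget(out _)) dead.Add(pair.Key);
            foreach (var id in dead) _map.Remove(id);
            return dead.Count;
        }
    }
}
```

The dictionary entries themselves are small, but they do not disappear on their own, so something would have to call `Prune()` now and then (for example, every so many requests).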

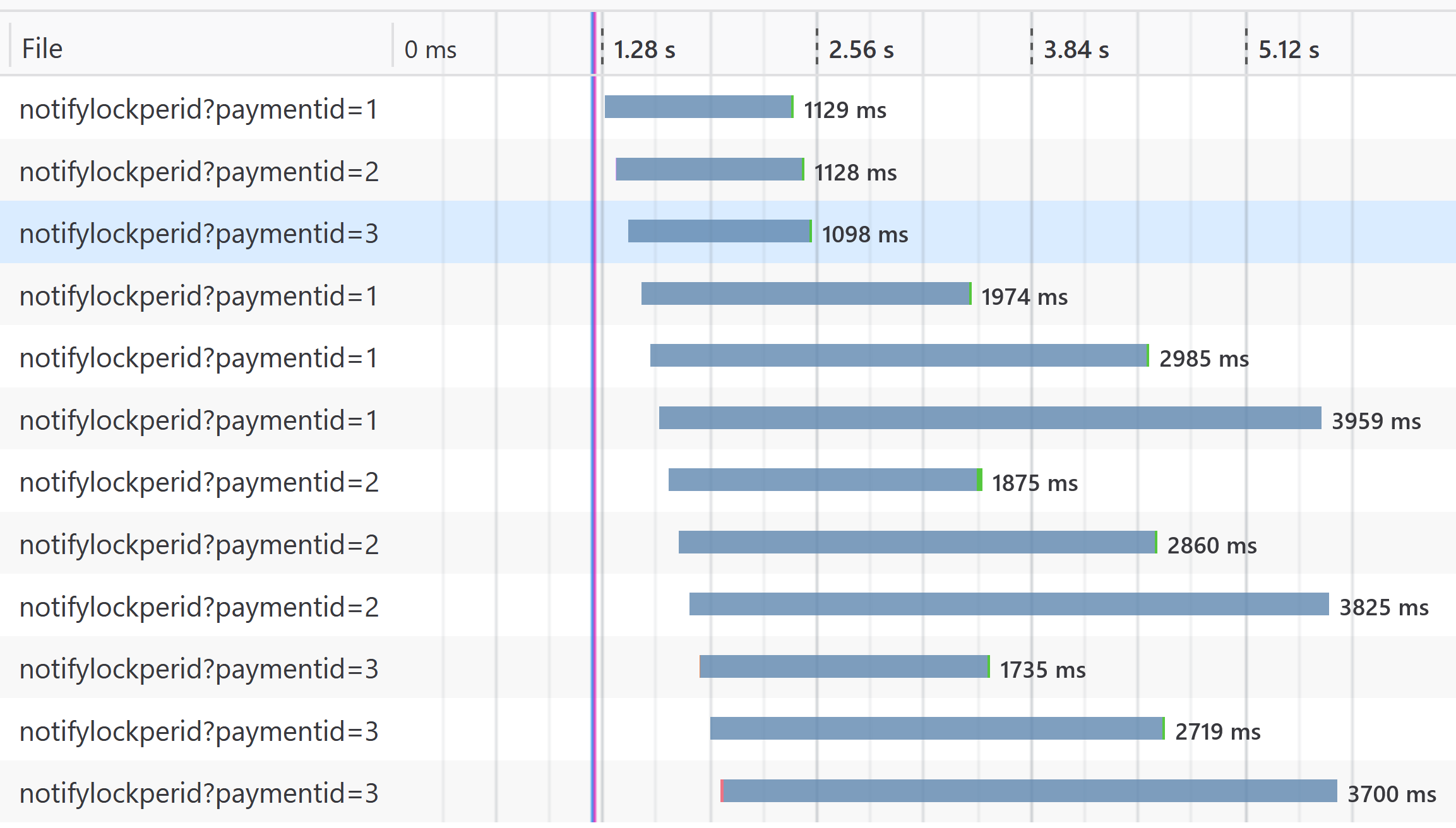

And here is the result:

Notice that the first three calls are handled just as fast as in the no-lock situation. Only when several notifications arrive for the same id does the delay start to build up.

The longest call is handled within 4 seconds instead of 12. That's a lot better!
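
The 12 versus 4 seconds follows from simple counting: 12 calls in total, but only 4 per id. A rough way to check this without a web server is sketched below. It is an assumption-laden simulation, not the test setup used above: `Task.Delay` stands in for the slow web service, the 50ms stagger is left out, and the per-id semaphores live in a plain `ConcurrentDictionary` rather than a weak-valued one.

```csharp
using System;
using System.Collections.Concurrent;
using System.Diagnostics;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

public static class LockTimingDemo
{
    // Same order of payment ids as in the test run described earlier.
    private static readonly int[] Ids = { 1, 2, 3, 1, 1, 1, 2, 2, 2, 3, 3, 3 };

    // Starts all notifications at once; pickLock decides which semaphore guards each id.
    public static async Task<TimeSpan> RunAsync(Func<int, SemaphoreSlim> pickLock, int workMs)
    {
        var watch = Stopwatch.StartNew();
        await Task.WhenAll(Ids.Select(async id =>
        {
            var gate = pickLock(id);
            await gate.WaitAsync();
            try { await Task.Delay(workMs); }   // stands in for the slow web service call
            finally { gate.Release(); }
        }));
        return watch.Elapsed;
    }

    // Measures the total time for "lock all" and for "lock per id".
    public static async Task<(TimeSpan LockAll, TimeSpan LockPerId)> CompareAsync(int workMs)
    {
        var single = new SemaphoreSlim(1, 1);
        var perId = new ConcurrentDictionary<int, SemaphoreSlim>();

        var lockAll = await RunAsync(_ => single, workMs);
        var lockPerId = await RunAsync(
            id => perId.GetOrAdd(id, _ => new SemaphoreSlim(1, 1)), workMs);
        return (lockAll, lockPerId);
    }
}
```

Calling `await LockTimingDemo.CompareAsync(1000)` should come out at roughly 12 seconds for the single lock and roughly 4 seconds per id, since the three ids no longer wait for each other.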

One technical thought, though: doesn't SemaphoreSlim implement IDisposable? Don't we have to call Dispose()? It turns out that if you don't use the AvailableWaitHandle property, Dispose() doesn't really do anything for SemaphoreSlim. More information here.

Once again, all code is available here.

Epilogue: Did We Really Solve It?

The observant developer may have realized that this only solves our issue as long as there's only one instance of this application. If we scale out, we still have the original issue: instead of racing threads, we could have racing instances.

So, what do we do then? Well, as stated before, then you use a Queue. You put all messages on a single queue and then either:

- Create a single processor to handle the messages one-by-one (same as the 'Lock All' part) or use the same trick by using a semaphore per id. If you scale out, you have the same issue again.

- Have a single-threaded processor per id. Only doable with a limited number of ids.

Doable, but with quite some limitations. The only "real" solution is that the payment web service returns the entire state of the object instead of just the delta. This is a nice example of how poor design can ripple through your entire architecture.