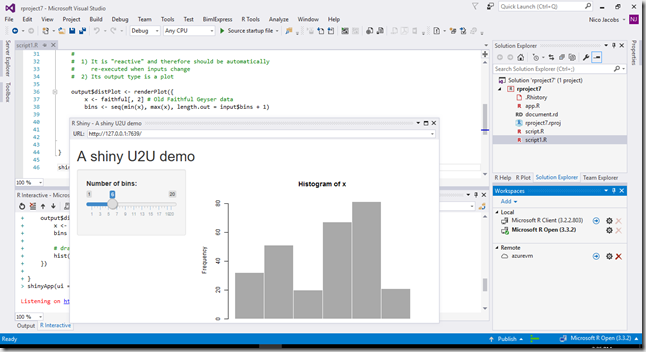

Running R on your local laptop is easy: you just download one of the R distributions (CRAN or Microsoft) and kick off RGui.exe. But if you’re a Microsoft-oriented developer or data scientist, you are probably familiar with Visual Studio. So you download and install R Tools for Visual Studio (RTVS) and you can happily run R code from within Visual Studio, link it to source control, etc.

Remote workspaces in R Tools for Visual Studio

But this decentralized approach can lead to issues:

- What if the volume of data increases? Since R holds its data frames in memory, we need at least 20 GB of memory when working with, e.g., a 16 GB data set. The same goes for CPU power: if the R code runs on a more powerful machine, it returns results faster.

- What if the number of RTVS users increases? Do we copy the data to each user’s laptop? This makes it difficult to manage the different versions of these data sets and increases the risk of data breaches.
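To get a feel for the memory question, here is a rough back-of-the-envelope sketch in Python. It assumes an all-numeric data frame (R stores numerics as 8-byte doubles) and a headroom factor of 1.25, which is simply the ratio between the 16 GB and 20 GB figures above. Both function names and the row/column counts are made up for illustration, and real workloads that copy data frames can need considerably more.

```python
def estimate_data_frame_gb(rows: int, numeric_cols: int, bytes_per_value: int = 8) -> float:
    """Approximate in-memory size of an all-numeric data frame, in GB (10^9 bytes)."""
    return rows * numeric_cols * bytes_per_value / 1e9


def required_ram_gb(data_gb: float, headroom: float = 1.25) -> float:
    """RAM to provision, assuming a simple headroom factor on top of the raw data size."""
    return data_gb * headroom


if __name__ == "__main__":
    # Hypothetical data set: 200 million rows, 10 numeric columns.
    data_gb = estimate_data_frame_gb(rows=200_000_000, numeric_cols=10)
    print(f"data: {data_gb:.1f} GB, RAM to provision: {required_ram_gb(data_gb):.1f} GB")
```

With these example numbers the data set comes to 16 GB and the estimate lands on 20 GB, which is more than most laptops have.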

This is why RTVS also allows us to write R code in a local instance of Visual Studio while executing it on a remote R server, which also holds a central copy of the data sets. This remote server can be an on-premises server, but if the data scientists do not need permanent access to it, it could be cheaper to just spin up an Azure virtual machine.

Setup

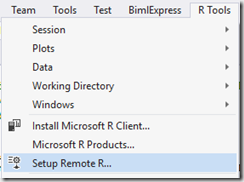

When we click the Setup Remote R… menu in our Visual Studio R Tools, it takes us to this webpage, which explains in detail how to set up a remote machine.

Unfortunately this detailed description was not detailed enough for me and I bumped into a few issues. So if you got stuck as well, read on!

Create an Azure VM

Log in to the Azure portal, click the + to create a new object and create a Windows Server 2016 Datacenter virtual machine. Stick to the Resource Manager deployment model and click Create.

When configuring the VM you can choose between SSD and HDD disks. The latter are cheaper; the former are faster if you often need to load large data sets. Also pay attention when you select your region: by creating the VM in the same region as where your data is stored, you can save data transfer costs. But be aware that the cost of a VM differs between regions. At the time of writing, the VM I used is 11% cheaper in West Europe than in North Europe.

In the next tab we must select the machine we want to create. I went for an A8m v2, which delivers 8 cores and 64 GB of RAM at a cost of about 520 euro/month if it runs 24/7.

Azure VM settings

Before I start my machine I change two settings: one essential, one optional.

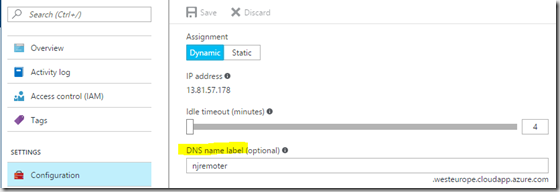

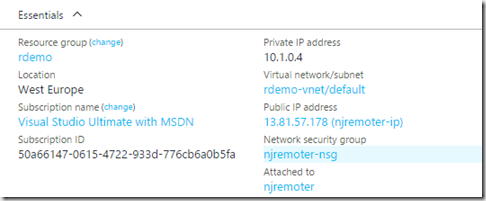

The essential setting is to give the machine a DNS name so that we can keep addressing the machine using a fixed name, even if it gets a different IP address after a reboot:

In the essentials window of the Azure virtual machine blade, click on the public IP address. This opens up a dialog where we can set an optional (but in our case required) DNS name. I used the name of the VM I created here. I don’t know if that’s essential, but it did the job in my case:

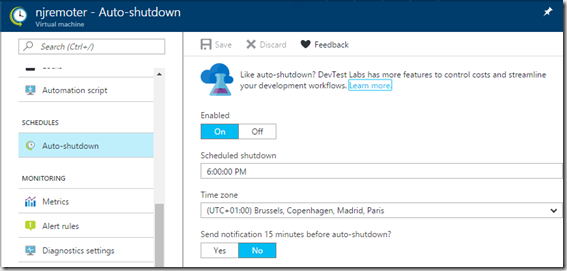
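The full name of the machine is the DNS label followed by the region and `cloudapp.azure.com`. As a small sketch, the Python snippet below builds that name and checks whether it resolves yet. The function names are my own, and the label and region are the ones from my example, so swap in yours.

```python
import socket


def azure_vm_fqdn(dns_label: str, region: str) -> str:
    """Build the public DNS name Azure assigns to a public IP with a DNS label."""
    return f"{dns_label}.{region}.cloudapp.azure.com".lower()


def resolves(hostname: str) -> bool:
    """Return True if the hostname currently resolves to an IP address."""
    try:
        socket.gethostbyname(hostname)
        return True
    except OSError:  # socket.gaierror is a subclass of OSError
        return False


if __name__ == "__main__":
    fqdn = azure_vm_fqdn("njremoter", "westeurope")
    print(fqdn, "resolves" if resolves(fqdn) else "does not resolve (yet)")
```

We will need exactly this name again for the certificate and the service configuration, so it is worth double-checking it once.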

The optional setting is the auto-shutdown option on your VM, which can save you some costs by shutting down the VM when all data scientists are asleep.
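To see what auto-shutdown could be worth, here is a rough estimate in Python. It assumes compute is only billed while the VM is running and ignores disk storage, which is still charged when the machine is stopped. The 520 euro figure is the 24/7 price mentioned earlier; the 10 hours a day, 5 days a week schedule is a made-up example.

```python
HOURS_PER_MONTH = 730  # average month: 365 * 24 / 12


def monthly_compute_cost(full_time_cost: float, hours_per_day: float, days_per_week: float) -> float:
    """Estimate the monthly compute cost for a VM that only runs part of the time."""
    hourly_rate = full_time_cost / HOURS_PER_MONTH
    hours_running = hours_per_day * days_per_week * 52 / 12  # average hours per month
    return hourly_rate * hours_running


if __name__ == "__main__":
    always_on = 520.0  # euro/month at 24/7, from the VM size chosen above
    office_hours = monthly_compute_cost(always_on, hours_per_day=10, days_per_week=5)
    print(f"office hours only: about {office_hours:.0f} euro/month instead of {always_on:.0f}")
```

Under these assumptions the bill drops to under a third of the 24/7 price.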

Configure the VM

Now we can start the virtual machine, connect via remote desktop and start following the instructions in the manual:

- Create a self-signed certificate. Be sure to use the DNS name we created in the previous step. In my example the statement to run from PowerShell would be:

New-SelfSignedCertificate -CertStoreLocation Cert:\LocalMachine\My -DnsName "njremoter.westeurope.cloudapp.azure.com"

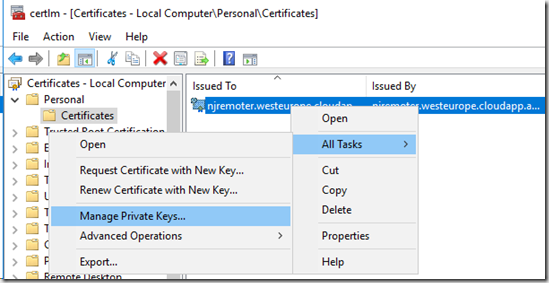

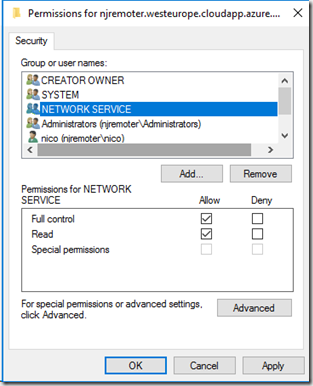

- Grant Full Control and Read permissions on this certificate to the NETWORK SERVICE account using certlm.msc:

- Install the R service using this installer

- Edit the C:\Program Files\R Remote Service for Visual Studio\1.0\Microsoft.R.Host.Broker.Config.json file and point it to the DNS name we used before. Again, on my machine this would be:

{
  "server.urls": "https://0.0.0.0:5444",
  "security": {
    "X509CertificateName": "CN=njremoter.westeurope.cloudapp.azure.com"
  }
}
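A typo in this file is an easy way to end up with a service that won’t start, so as a small sketch you could generate the JSON instead of typing it. The Python below only prints the content, using the same two keys shown above. The function names are hypothetical, and the check only compares the certificate name with the DNS name you expect.

```python
import json


def broker_config(dns_name: str, port: int = 5444) -> dict:
    """Build the broker settings shown above for a given DNS name."""
    return {
        "server.urls": f"https://0.0.0.0:{port}",
        "security": {"X509CertificateName": f"CN={dns_name}"},
    }


def certificate_name_matches(config: dict, dns_name: str) -> bool:
    """Check that the configured certificate subject matches the expected DNS name."""
    configured = config.get("security", {}).get("X509CertificateName", "")
    return configured.lower() == f"cn={dns_name}".lower()


if __name__ == "__main__":
    name = "njremoter.westeurope.cloudapp.azure.com"
    config = broker_config(name)
    assert certificate_name_matches(config, name)
    print(json.dumps(config, indent=2))  # paste this into the config file
```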

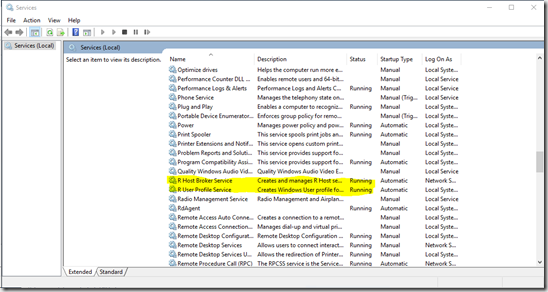

- Restart the virtual machine. Verify that the two R-related services start up correctly:

If the services fail to start, check the log files in C:\Windows\ServiceProfiles\NetworkService\AppData\Local\Temp

- Last but not least, we must open up port 5444. The R service installer already takes care of that on the Windows Firewall, but we still need to open up the port on the Azure side. In the Azure portal go to the virtual machine, select Network Interfaces and click the default network interface. In the blade of this interface click the default network security group:

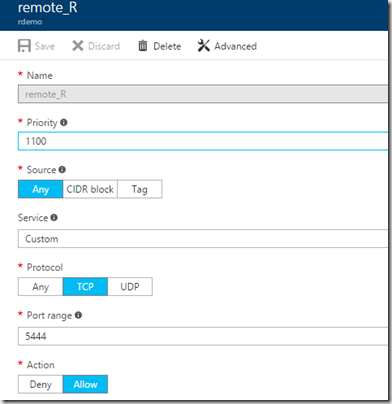

Create a new inbound security rule, allowing access on TCP port 5444. In my example the firewall allows access from all IP addresses. For security reasons you had better limit yours, using a CIDR block, to just the IP addresses of your data scientists.
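If CIDR notation is not your daily bread, Python’s standard `ipaddress` module lets you check quickly whether a given client address falls inside the blocks you plan to allow. This is only a sketch for reasoning about the rule, not something Azure runs. The addresses are documentation ranges and the function name is mine.

```python
import ipaddress


def is_allowed(client_ip: str, allowed_cidrs) -> bool:
    """Return True if client_ip falls inside at least one of the allowed CIDR blocks."""
    ip = ipaddress.ip_address(client_ip)
    return any(ip in ipaddress.ip_network(cidr) for cidr in allowed_cidrs)


if __name__ == "__main__":
    # Hypothetical office range plus one home address (/32 means a single IP).
    allowed = ["203.0.113.0/24", "198.51.100.7/32"]
    for candidate in ["203.0.113.25", "198.51.100.7", "192.0.2.1"]:
        print(candidate, "allowed" if is_allowed(candidate, allowed) else "blocked")
```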

Configuring RTVS

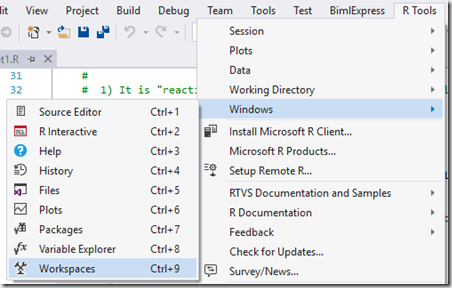

Our server is now configured. The last (and easiest) step is to configure our R Tools for Visual Studio. So, on the client machines, open up the R Tools Workspaces window:

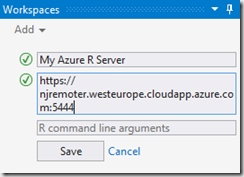

Then click Add and configure the server. On my machine this would be:

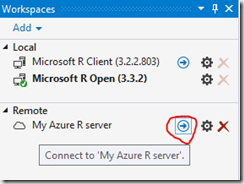

Click Save, then click the blue connect arrow next to our remote connection:

Unless you added the VM to your domain, we will now need to provide the credentials of one of the users we created on the server. You also get a prompt warning you that a self-signed certificate was used, which is less safe:

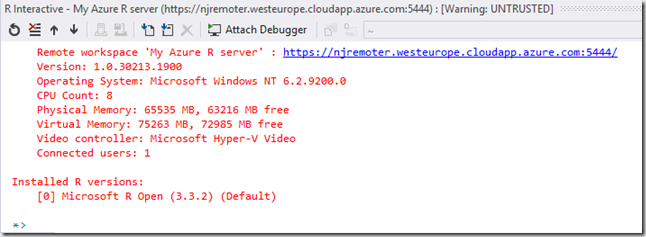

And from now on we are running our R scripts on this remote machine. You can see this, among other things, by a change of prompt: *> instead of >

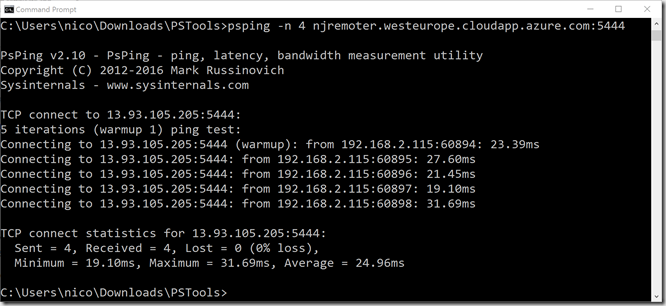

If you have problems connecting to your server, Visual Studio can claim it couldn’t ‘ping’ your server. Don’t try to do a regular ping, since Azure VMs don’t support this. Use e.g. PsPing or a similar tool to ping a specific port, in our case 5444.
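If you don’t have PsPing at hand, a plain TCP connect does roughly the same job. The Python sketch below tries to open a connection to the port and reports how long it took. It only tells you the port is reachable; it says nothing about whether the R service behind it is healthy. The function name is my own and the host name is the one from my example.

```python
import socket
import time


def tcp_ping(host: str, port: int = 5444, timeout: float = 3.0):
    """Return the TCP connect time in milliseconds, or None if the port is unreachable."""
    start = time.perf_counter()
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return (time.perf_counter() - start) * 1000
    except OSError:  # covers refused connections, timeouts and DNS failures
        return None


if __name__ == "__main__":
    host = "njremoter.westeurope.cloudapp.azure.com"  # replace with your own DNS name
    elapsed = tcp_ping(host)
    if elapsed is None:
        print(f"{host}:5444 is not reachable")
    else:
        print(f"{host}:5444 answered in {elapsed:.0f} ms")
```

If this fails while the services are running on the VM, the network security group rule is the first thing I would check.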

I hope this gets you up to speed with remote R workspaces. If you still experience issues, you can always check the GitHub repository for further help.